GenAI (LLMs vs. Foundation Models): Difference Explained in Simple English

I have heard people use the terms “LLMs” (Large Language Models) and “Foundation Models” in the same context. Both terms are common in discussions of AI and natural language processing, but they have slightly different meanings based on their scope and use. So I decided to research and write about it. In this article, we will look at what each term means, the benefits of each, and the differences between the two.

LLMs (Large Language Models)

LLMs are a subset of foundation models, specifically designed to understand and generate human-like text. These models are trained on vast amounts of textual data and often have billions or even trillions of parameters, enabling them to perform tasks like text generation, translation, summarization, and more. GPT (Generative Pre-trained Transformer) and BERT (Bidirectional Encoder Representations from Transformers) are prime examples of this technology, although BERT is much smaller (roughly 110 to 340 million parameters) and is used mainly for language understanding tasks such as classification rather than free-form text generation.

LLMs are primarily focused on tasks related to natural language processing (NLP). Their capabilities are largely language-based, including tasks like answering questions, generating coherent text, or translating languages.

Key features include:

- Relevance: They capture language structure and semantics well enough to generate coherent and contextually relevant text.

- Versatility: LLMs can be fine-tuned for specific language-based tasks with relatively little additional data.

- Adaptability: Through techniques like few-shot learning, they can adapt to new tasks after seeing just a few examples, often supplied directly in the prompt.
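Few-shot adaptation is easy to picture in code. The sketch below only assembles a few-shot prompt: a short instruction, two labeled examples, and an unanswered query that the model is expected to complete. The sentiment task, the `Input:`/`Output:` layout, and the function name `build_few_shot_prompt` are illustrative assumptions, and sending the prompt to an actual LLM is left out because that API depends on the provider.

```python
# A minimal sketch of few-shot prompting: the task is "taught" entirely
# through examples placed in the prompt; no model weights are updated.
# The task, layout, and function name are illustrative assumptions.

def build_few_shot_prompt(instruction, examples, query):
    """Return a prompt with an instruction, labeled examples, and a new query."""
    lines = [instruction, ""]
    for text, label in examples:
        lines.append(f"Input: {text}")
        lines.append(f"Output: {label}")
        lines.append("")
    # The final input has no answer, so the model is expected to fill it in.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


examples = [
    ("I loved this movie!", "positive"),
    ("The plot was boring and too long.", "negative"),
]

prompt = build_few_shot_prompt(
    "Classify the sentiment of each input as positive or negative.",
    examples,
    "The acting was wonderful.",
)
print(prompt)
```

Changing the examples changes the task, which is why the same model can be pointed at new problems without retraining.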

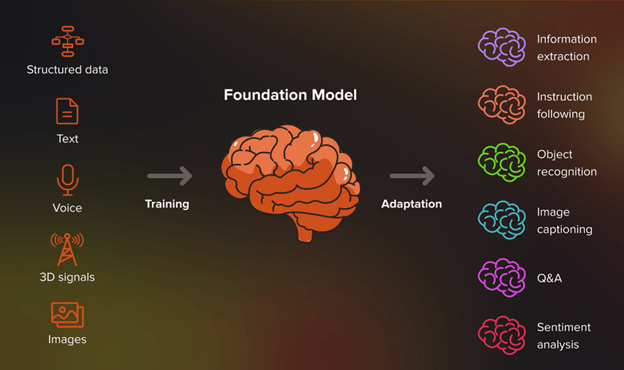

Foundation Models

Foundation models are a class of AI models pre-trained on vast amounts of data across various domains, enabling them to develop a wide range of capabilities. A foundation model could be something like GPT-4, which can be fine-tuned for NLP, or a multi-modal model like DALL-E, which generates images from text prompts.

Foundation models have a broader focus beyond just language. They are designed to serve as general-purpose models that can be adapted for a wide variety of tasks, including language, vision, robotics, etc.

The key characteristics of foundation models include:

- Universality: Through fine-tuning or few-shot learning techniques, they can be applied to a wide range of tasks beyond what they were initially trained on.

- Scalability: They are designed to scale with more data and computational resources, often leading to improved performance.

- Portability: The knowledge learned by these models can be transferred to various domains and tasks with minimal additional training.
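The portability idea can be sketched with a toy example: one frozen representation shared by two unrelated tasks, each adapted with only two labeled examples. Everything here is a hypothetical stand-in. `frozen_backbone` is a bag-of-words counter over a tiny fixed vocabulary rather than a real pretrained model, and each task head is a simple nearest-centroid classifier, chosen for brevity rather than because foundation models are typically adapted this way.

```python
import math
from collections import defaultdict

# Hypothetical, toy "pretrained" representation: a fixed vocabulary that is
# never retrained. A real foundation model would produce dense embeddings.
VOCAB = ["great", "love", "awful", "hate", "goal", "match", "pizza", "recipe"]


def frozen_backbone(text):
    """Map text to a feature vector; shared by every task and never updated."""
    words = [w.strip(".,!?") for w in text.lower().split()]
    return [float(words.count(v)) for v in VOCAB]


class NearestCentroidHead:
    """Lightweight task-specific head trained on a handful of labeled examples."""

    def fit(self, texts, labels):
        sums = defaultdict(lambda: [0.0] * len(VOCAB))
        counts = defaultdict(int)
        for text, label in zip(texts, labels):
            vec = frozen_backbone(text)
            sums[label] = [s + v for s, v in zip(sums[label], vec)]
            counts[label] += 1
        # Each label is represented by the average feature vector of its examples.
        self.centroids = {
            label: [s / counts[label] for s in total] for label, total in sums.items()
        }
        return self

    def predict(self, text):
        vec = frozen_backbone(text)
        # Pick the label whose centroid is closest in Euclidean distance.
        return min(self.centroids, key=lambda label: math.dist(vec, self.centroids[label]))


# Two different tasks reuse the same frozen backbone.
sentiment = NearestCentroidHead().fit(
    ["i love it great", "i hate it awful"], ["positive", "negative"]
)
topic = NearestCentroidHead().fit(
    ["what a goal in the match", "a pizza recipe"], ["sports", "food"]
)

print(sentiment.predict("I love this!"))
print(topic.predict("That goal was great!"))
```

Only the small heads are trained; the backbone is reused unchanged, which is the pattern that lets one foundation model serve many tasks. In practice the backbone would be a large pretrained network, and adaptation would usually mean fine-tuning it or training a small layer on top of its embeddings.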

So, what is the difference between the two?

Key Differences:

- Scope: All LLMs are foundation models (if they’re large enough), but not all foundation models are LLMs. Foundation models can be applied across a range of modalities, not just language.

- Modality: LLMs are specifically focused on language, while foundation models might handle a mix of text, images, or other data types.

- Use Case: LLMs are specialized for NLP tasks. Foundation models, being general-purpose, can be fine-tuned for a variety of tasks including NLP, computer vision, and more.
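The scope and modality differences can be summarized as a small data model in which every model is a foundation model and "LLM" is simply the label for the text-in, text-out case. This is a simplified sketch: the `FoundationModel` class and its `is_llm` rule are hypothetical, the modality sets for GPT-3 and DALL-E are simplified, and the size condition mentioned under Scope is ignored.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FoundationModel:
    """A pretrained, general-purpose model described only by the data types it handles."""
    name: str
    inputs: frozenset
    outputs: frozenset

    def is_llm(self) -> bool:
        # Simplified rule: an LLM is a foundation model whose inputs and
        # outputs are both text. Model size is ignored here.
        return self.inputs == {"text"} and self.outputs == {"text"}


# Modality sets are simplified for illustration.
models = [
    FoundationModel("GPT-3", frozenset({"text"}), frozenset({"text"})),
    FoundationModel("DALL-E", frozenset({"text"}), frozenset({"image"})),
]

for m in models:
    kind = "LLM" if m.is_llm() else "non-LLM foundation model"
    print(f"{m.name}: {kind}")
```

Every entry is a `FoundationModel`, but only the text-only one passes `is_llm`, mirroring the "all LLMs are foundation models, but not the reverse" relationship.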

Conclusion

Large Language Models (LLMs) are a specific subset of foundation models focused on understanding and generating human-like text, excelling in natural language processing tasks. Foundation models, on the other hand, are broader and can handle multiple modalities like text, images, and audio, serving as general-purpose models adaptable to various tasks. While all LLMs are foundation models, not all foundation models are limited to language; many of them offer more versatile applications across AI domains.