Unlock Real-Time Analytics and Optimized Workflows with Databricks: A Dive into Streaming Tables and Materialized Views

AUDIENCE: Technical

LEVEL: Basic

The modern data landscape demands speed, scalability, and precision, especially as organizations seek to make real-time decisions. Databricks, with its Lakehouse architecture, continues to empower your data teams to meet these challenges through innovative tools like streaming tables and materialized views. These features, coupled with the AI-powered Databricks Assistant, provide an end-to-end solution for ingesting, transforming, and optimizing data workflows.

In this article, we’ll dive into how streaming tables and materialized views can help your organization overcome traditional challenges in data warehousing and analytics, offering a faster, more cost-efficient way to unlock business insights.

The Need for Smarter Data Pipelines

Data warehouses are the backbone of analytics and business intelligence (BI) workflows, but they have historically been ill-equipped for streaming ingestion and real-time transformation.

Key challenges with data warehouses:

- Dependency on External Tools: Analysts often depend on external resources to resolve data issues, which delays decision-making.

- Lagging BI Dashboards: Stale data and slow query performance hinder usability and insights.

- Costly Maintenance: Managing batch-based pipelines across separate systems is both expensive and prone to errors.

The Databricks Lakehouse Platform addresses these barriers by unifying streaming and batch processing on a single platform. Databricks cites up to 12x better price/performance for analytics workloads. SQL practitioners can now tackle these challenges directly, without third-party tools or complex configuration.

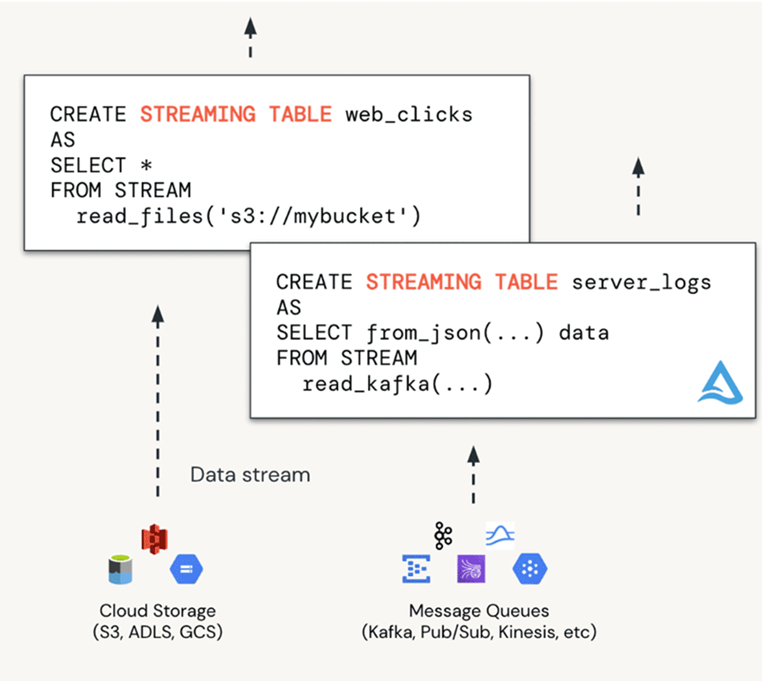

Streaming Tables: Real-Time Data Processing Simplified

Streaming tables are the foundation for real-time data ingestion in the Lakehouse. They are designed for continuously growing (typically append-only) datasets. These stateful tables process data incrementally, which makes them well suited to high-volume workloads. Traditional batch jobs often reprocess their full input on every run. A streaming table instead keeps track of what it has already ingested and processes each new row only once, so large, fast-growing datasets can be handled efficiently.
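The following Python sketch shows what incremental, once-only processing means. It is a toy model, not the Databricks API. The `ToyStreamingTable` class, its `refresh` method and its `checkpoint` field are hypothetical names. The sketch also assumes an append-only, in-memory source. Real streaming tables are defined in SQL, and Databricks tracks their progress for you.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List

Row = Dict[str, Any]


@dataclass
class ToyStreamingTable:
    """Hypothetical in-memory model of a streaming table (not the Databricks API).

    `checkpoint` counts how many rows of the append-only source have already
    been consumed. Each refresh reads only rows past the checkpoint, so every
    source row is transformed exactly once across all refreshes.
    """
    transform: Callable[[Row], Row]
    checkpoint: int = 0
    rows: List[Row] = field(default_factory=list)

    def refresh(self, source: List[Row]) -> int:
        """Process rows appended since the last refresh; return how many were new."""
        new_rows = source[self.checkpoint:]
        self.rows.extend(self.transform(r) for r in new_rows)
        self.checkpoint = len(source)  # assumes rows are never removed from the source
        return len(new_rows)


# Usage: a hypothetical append-only "orders" feed that grows between refreshes.
source: List[Row] = [
    {"order_id": 1, "amount": 10.0},
    {"order_id": 2, "amount": 25.5},
]
table = ToyStreamingTable(transform=lambda r: {**r, "amount_cents": round(r["amount"] * 100)})

first = table.refresh(source)    # processes both initial rows
source.append({"order_id": 3, "amount": 7.25})
second = table.refresh(source)   # processes only the newly appended row
third = table.refresh(source)    # nothing new, so nothing is reprocessed

print(first, second, third)                  # 2 1 0
print([r["order_id"] for r in table.rows])   # [1, 2, 3]
```

The checkpoint only moves forward, so calling `refresh` again never duplicates rows. This is the property that lets a streaming table keep up with a growing source without rescanning it.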

Much of the appeal of streaming tables lies in their simplicity. Because they are defined in SQL, creating one is about as straightforward as creating a regular table.

Using commands like CREATE OR REFRESH STREAMING TABLE, your data engineers and analysts can seamlessly ingest data streams into the Lakehouse.

This opens the door to a wide range of use cases, such as real-time analytics, BI, machine learning, and operational decision-making. It helps your organization stay ahead in today’s data-driven landscape.

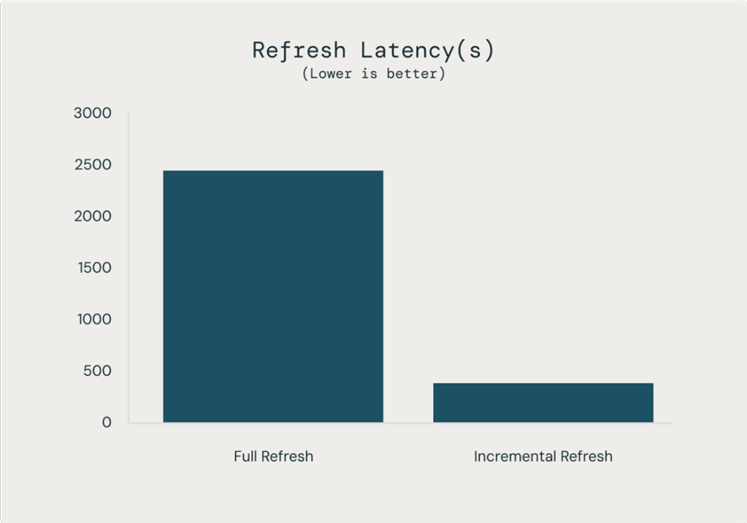

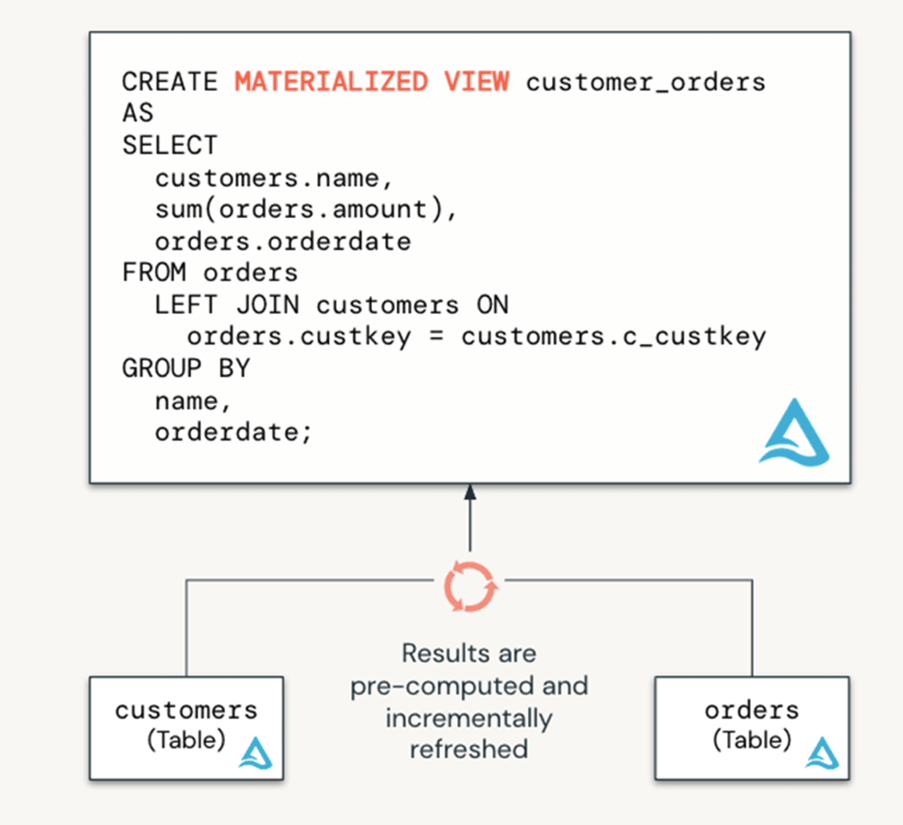

Materialized Views: Faster Queries, Lower Costs

While streaming tables excel at ingestion, materialized views optimize query performance. They precompute and store the results of complex SQL queries, so later queries read the stored results instead of recomputing them. This significantly reduces query time and compute cost. When new data arrives, a materialized view can be refreshed incrementally where possible, processing only what changed instead of rebuilding the whole result. This is especially valuable for frequently accessed data behind BI dashboards and reports.
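A few lines of Python can show the difference between recomputing a query and refreshing it incrementally. The `ToyMaterializedView` class below is hypothetical. It stands in for a view that computes a sum of `amount` grouped by `region` (hypothetical column names). The sketch assumes an append-only base table and an aggregate (a sum) that can be updated from new rows alone. A real engine must also handle updates and deletes, or fall back to a full recompute; this sketch does not attempt that.

```python
from collections import defaultdict
from typing import Dict, List


def full_recompute(rows: List[dict]) -> Dict[str, float]:
    """Baseline: evaluate SUM(amount) GROUP BY region over every row, from scratch."""
    totals: Dict[str, float] = defaultdict(float)
    for r in rows:
        totals[r["region"]] += r["amount"]
    return dict(totals)


class ToyMaterializedView:
    """Hypothetical model of an incrementally refreshed materialized view.

    It stores the precomputed SUM(amount) GROUP BY region result and, on
    refresh, folds in only base rows appended since the previous refresh.
    This works because a sum can be updated from a delta; it assumes the
    base table is append-only.
    """

    def __init__(self) -> None:
        self.result: Dict[str, float] = {}
        self._rows_seen = 0  # number of base rows already folded into self.result

    def refresh(self, base_rows: List[dict]) -> Dict[str, float]:
        for r in base_rows[self._rows_seen:]:
            self.result[r["region"]] = self.result.get(r["region"], 0.0) + r["amount"]
        self._rows_seen = len(base_rows)
        return self.result


orders = [
    {"region": "EU", "amount": 100.0},
    {"region": "US", "amount": 50.0},
]
mv = ToyMaterializedView()
mv.refresh(orders)                          # initial build reads both rows

orders.append({"region": "EU", "amount": 25.0})
mv.refresh(orders)                          # reads only the one new row

# Dashboards read mv.result directly instead of re-running the aggregation.
print(mv.result)                            # {'EU': 125.0, 'US': 50.0}
print(mv.result == full_recompute(orders))  # True
```

The final check confirms that folding in only the newly appended row gives the same answer as recomputing over every row.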

Beyond performance, materialized views also strengthen governance by allowing tighter control over base table access. Users can query a materialized view's precomputed results without direct access to the underlying tables, so sensitive data stays protected. With a few lines of SQL, you can create materialized views that balance speed, efficiency, and security for the diverse needs of data teams.
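The governance pattern can be illustrated with a toy access model. The sketch below is a hypothetical in-memory catalog, not Unity Catalog or any Databricks API. `ToyCatalog`, `grant_select`, and the table and user names are made up for illustration. An analyst can read a precomputed aggregate but not the base table that holds customer emails.

```python
from typing import Dict, List, Set


class ToyCatalog:
    """Hypothetical catalog with per-object read grants (not a Databricks API)."""

    def __init__(self) -> None:
        self.objects: Dict[str, List[dict]] = {}
        self.readers: Dict[str, Set[str]] = {}

    def create(self, name: str, rows: List[dict]) -> None:
        self.objects[name] = rows
        self.readers[name] = set()  # nobody can read a new object until granted

    def grant_select(self, name: str, user: str) -> None:
        self.readers[name].add(user)

    def select(self, name: str, user: str) -> List[dict]:
        if user not in self.readers[name]:
            raise PermissionError(f"{user} may not read {name}")
        return self.objects[name]


catalog = ToyCatalog()
# Base table with a sensitive column; only the data owner may read it.
catalog.create("orders_raw", [
    {"customer_email": "a@example.com", "region": "EU", "amount": 100.0},
    {"customer_email": "b@example.com", "region": "EU", "amount": 25.0},
    {"customer_email": "c@example.com", "region": "US", "amount": 50.0},
])
catalog.grant_select("orders_raw", "data_owner")

# The owner precomputes an aggregate that leaves out customer_email...
totals: Dict[str, float] = {}
for r in catalog.select("orders_raw", "data_owner"):
    totals[r["region"]] = totals.get(r["region"], 0.0) + r["amount"]
catalog.create("revenue_by_region", [{"region": k, "total": v} for k, v in totals.items()])

# ...and grants the analyst access to the aggregate only.
catalog.grant_select("revenue_by_region", "analyst")

print(catalog.select("revenue_by_region", "analyst"))
# [{'region': 'EU', 'total': 125.0}, {'region': 'US', 'total': 50.0}]

analyst_denied = False
try:
    catalog.select("orders_raw", "analyst")
except PermissionError as err:
    analyst_denied = True
    print("denied:", err)  # denied: analyst may not read orders_raw
```

In Databricks you would express this with SQL privilege grants rather than Python. The sketch only shows that the aggregate and its base table carry separate permissions.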

Revolutionizing Data Workflows

Streaming tables, materialized views, and the Databricks Assistant form a powerful trifecta for modern data engineering. Streaming tables bring real-time analytics within reach, and materialized views keep queries fast and efficient. The AI-powered Assistant helps users write, explain, and troubleshoot code, so they can tackle data challenges with confidence. Together, they simplify workflows, reduce operational overhead, and accelerate the path to insights.

Conclusion

As organizations navigate increasingly complex data ecosystems, tools like these are essential for staying competitive. Databricks provides the technology to process data at scale and makes it accessible to a wide range of users, from seasoned engineers to business analysts. By leveraging these capabilities, your organization can unlock new opportunities, drive innovation, and deliver meaningful results on fresh, near-real-time data.