How Transformer Models Power Large Language Models (LLMs) Like GPT, BERT, Dall-E, and T5 in Simple English

Transformer models have become the foundation* of large language models (LLMs) like GPT, BERT, Dall-E, T5…. Their unique architecture has unlocked new possibilities, making language models more powerful, efficient, and capable of handling complex tasks. Transformers are basically essential to the success of any LLMs implement today. In this article we will discuss why transformer models are revolutionizing how AI processes and generates language.

*Not to be confused by foundation model. You can read about foundation model in our other article here.

Mastering Context: How Self-Attention Unlocks the Power of Transformer Models

When we (humans) talk we understand the flow of a sentence and understand the relationship of the words. But this was not simple for older Machine Learning Models.

For example, consider the sentence:

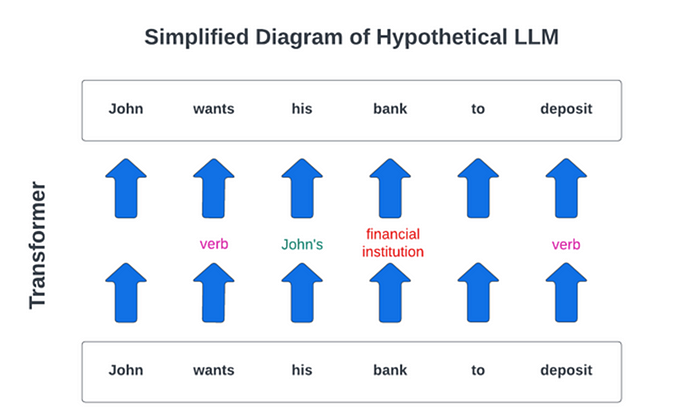

“John wants his bank to deposit.”

Older models* may have struggled to capture the relationship between “John” and “bank” because of the intervening words. But transformers understand this connection easily to deliver more contextually accurate outputs.

The secret sauce of transformer: Self-attention mechanism

At the core of transformer models is the self-attention mechanism. This process enables transformers to weigh the importance of each word in a sentence relative to the others, regardless of how far apart they are. Unlike older models, transformers have unique power to allow LLMs to analyze an entire sentence at once, understanding nuances and relationships between words much more effectively.

In addition, the ability to process all words simultaneously makes transformers highly efficient. This becomes more relevant in long or complex sequences. This is critical for LLMs, where speed and accuracy in understanding large amounts of text are essential.

*In older models like Recurrent Neural Networks (RNNs), context was harder to capture because they processed words in sequence.

The Scalability Edge: How Transformers Unlock the Secrets of Massive Data

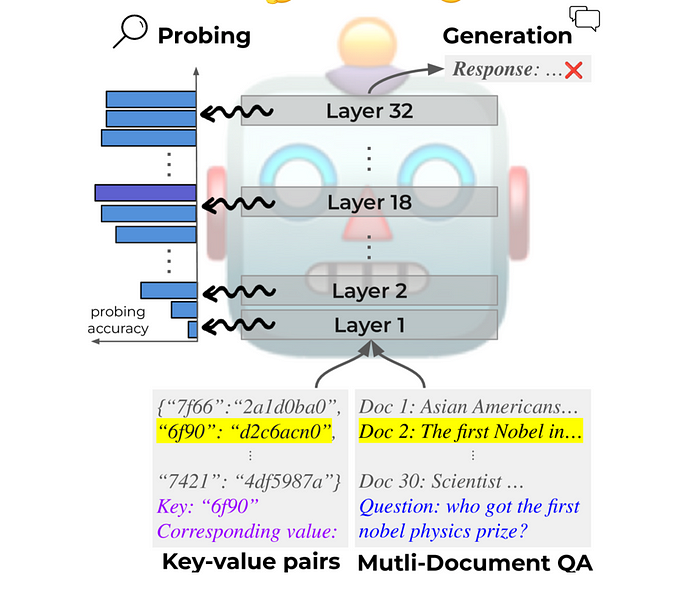

One of the greatest strengths of transformer models is their scalability. By adding more layers and parameters, transformers can handle massive datasets and uncover intricate patterns within the data.

This scalability allows models such as GPT, BERT, Dall-E or T5 to be trained on vast amounts of text, reaching billions or even trillions of parameters. The result is a language model that can handle sophisticated tasks, from generating creative content to performing detailed data analysis.

Wired for Context: Transformers Mimic Human-like Communication

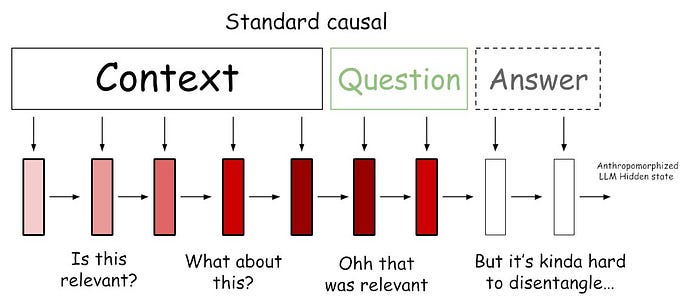

When we (humans) communicate we interpret words, phrase or sentence, not just based on meaning, but also based on context of previous conversation. Our brains are naturally wired to manage this.

Now, in case of natural language processing (NLP) this human-behavior translates to understanding the long-term dependencies in text. Just like our brain, transformers excel at managing these dependencies, preserving context even when processing long passages of text. This means LLMs like GPT can generate coherent and meaningful content, maintaining a natural flow even over extended conversations or paragraphs.

Sentence Structure: Decoding Word Order with Positional Encoding

Unlike older models, transformers don’t inherently understand the order of words in a sequence. To address this, they use a technique called positional encoding. Positional encoding helps the model keep track of word order, ensuring that phrases and sentences retain their proper structure. Without this, transformers might treat sentences like “The dog chased the cat” and “The cat chased the dog” as identical, which would lead to incorrect interpretations.

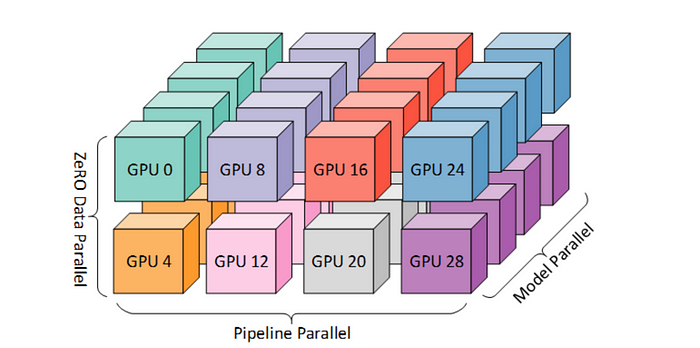

Processing Efficiency: Transformer Models Compute Leveraging Parallel Processing

Transformers also make efficient use of computational resources, particularly with modern hardware like GPUs and TPUs. They leverage parallel computation, allowing them to process data faster and more effectively than models that rely on sequential processing. This efficiency is key when training LLMs with enormous datasets, enabling them to learn faster and achieve better results.

Improved Domain Expertise: Transformers Enable Specialization through Pretraining and Fine-Tuning

Transformers play a crucial role in supporting transfer learning, which has revolutionized the AI world by allowing models to leverage pre-existing knowledge for improved performance on specific tasks. LLMs such as GPT , BERT, Dall-E or T5 are first pretrained on massive datasets to learn general language representations. Once pretrained, they can be fine-tuned for specific tasks, such as translation, summarization, or question-answering. This pretraining and fine-tuning process significantly improves the model’s performance across a wide range of applications.

For instance, after GPT is pretrained on a diverse dataset of internet text, it can be fine-tuned for specialized use cases like customer support, academic research, or content generation. The transformer architecture’s flexibility makes it adaptable across different domains, expanding its usefulness beyond just generic language tasks.

Conclusion

The transformer architecture has transformed how large language models such as GPT, BERT, Dall-E or T5 process, understand, and generate human language. With its self-attention mechanism, scalability, and ability to handle long-term dependencies, transformers enable LLMs to achieve deep contextual understanding and produce coherent, meaningful content. Add to this the efficiency of parallel processing and the adaptability through transfer learning, and it’s clear why transformers have become the backbone of cutting-edge AI.

As we move forward, the potential of transformers will only grow, driving advancements in natural language processing that could reshape industries, enhance user experiences, and unlock innovations in countless fields. The ability to scale these models and continue improving their efficiency and capabilities promises a future where AI becomes even more deeply integrated into our daily lives, from business operations to personal interactions.