Unlocking the Power of RAG: Balancing Instant Insights with Security — Expert Insights from Databricks Summit

LEVEL: Business and Technical

At the Databricks Summit I had some fantastic conversations with thought leaders in the AI/ML space about the pressing challenges of securing Retrieval-Augmented Generation (RAG) systems. What we discussed matters to anyone in the data industry, especially as we look toward the future of data accessibility and security. In this article I share insights from Sanjeev Mohan of SanjMo; Saket Saurabh, CEO of Nexla; Kamal Maheshwari, Co-Founder of DeCube; Lalit Suresh, CEO and Founder; Sarah Nagy, CEO of Seek.AI; Dr. Ali Arsanjani of Google Cloud; Brian Raymond of Unstructured; and Matthew Kosovec, CEO and Co-Founder.

Democratizing Insights with LLM + RAG

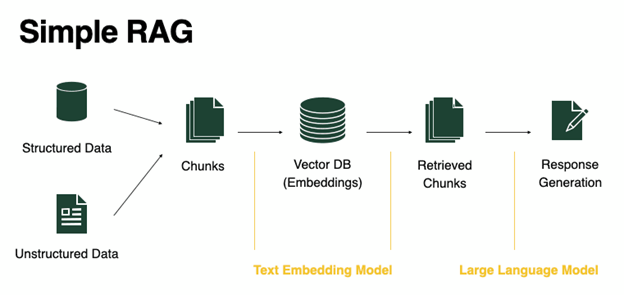

The combination of Large Language Models (LLMs) and RAG is set to change the way we access and use data insights. Early in my career, creating a new Business Intelligence (BI) report on an on-premises data warehouse was a laborious process that could take up to three months. Fast forward to today, and tools like dbt allow us to produce new data products in just days. This progress is remarkable.

The integration of LLMs with RAG promises to take us even further. With these technologies, new insights can be available almost instantly, empowering everyone to become data-driven. This represents a significant leap in information processing capabilities. It all sounds incredible, right? However, this speed of insight comes with its own security risks.

The Security Conundrum

Feeding these real-time insights requires complex queries over vast amounts of data, and that data must be securely managed. At the same time, the growing frequency of cloud data breaches and the tightening of security regulations highlight the urgent need for better data security measures. According to these experts, data engineers already struggle to keep access controls up to date, and the mere mention of ‘access management’ often induces a collective groan.

Current workflows and technologies for access management are not equipped to handle the demands of RAG. Because RAG is non-deterministic, it is nearly impossible to predict in advance which data an LLM + RAG system will pull in to answer a given question. This unpredictability makes it hard to define appropriate data access and security controls ahead of time, leaving data engineers inundated with access requests and exposing organizations to substantial security risks.
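To make the problem concrete, here is a minimal sketch of where an access check would have to sit in a RAG pipeline: at retrieval time, before any text reaches the LLM. Everything in it is an illustrative assumption rather than something the experts described: the `Document` class, the `allowed_roles` field, the toy word-overlap `score` function, and the sample corpus are all hypothetical stand-ins for a real vector store and a real entitlement system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    allowed_roles: frozenset  # hypothetical ACL attached to each chunk


def score(query: str, doc: Document) -> int:
    """Toy relevance score: number of distinct query words found in the text."""
    query_words = set(query.lower().split())
    doc_words = set(doc.text.lower().split())
    return len(query_words & doc_words)


def retrieve(query, corpus, user_roles, k=2):
    """Return up to k relevant documents that the user is entitled to read."""
    # Filter by entitlement first, so restricted text never reaches the prompt.
    permitted = [d for d in corpus if d.allowed_roles & user_roles]
    ranked = sorted(permitted, key=lambda d: score(query, d), reverse=True)
    return [d for d in ranked[:k] if score(query, d) > 0]


corpus = [
    Document("sales-q2", "quarterly revenue by region", frozenset({"sales", "finance"})),
    Document("payroll", "employee salary and revenue share", frozenset({"hr"})),
    Document("roadmap", "product roadmap by quarter", frozenset({"product"})),
]

# The same question yields different context depending on who is asking.
analyst_context = retrieve("revenue by region", corpus, user_roles={"sales"})
hr_context = retrieve("revenue by region", corpus, user_roles={"hr"})
```

The filter itself is simple. The hard part, as the paragraph above argues, is keeping something like `allowed_roles` accurate for every piece of data when nobody can predict which pieces a question will touch.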

A Call for Innovation

Clearly, the existing methods of access management are on the brink of failure under the weight of RAG systems. The data community must innovate to find new solutions that can secure these advanced data systems. Without a breakthrough in access management technology, the potential benefits of LLM + RAG could be overshadowed by the associated security vulnerabilities.

My conversations with these experts highlighted the need for a paradigm shift in how we approach data security in the age of RAG. It is a challenge that requires urgent attention and collaborative problem-solving across the industry.

Conclusion

As we stand on the cusp of a new era in data processing and accessibility with LLM + RAG, the importance of securing these systems cannot be overstated. While the promise of instant, democratized insights is exciting, it brings with it a host of security challenges that we must address. The future of data-driven decision-making hinges on our ability to innovate and secure these advanced technologies effectively.

Let’s embrace this challenge and work towards a safer, more secure data future.